Introduction

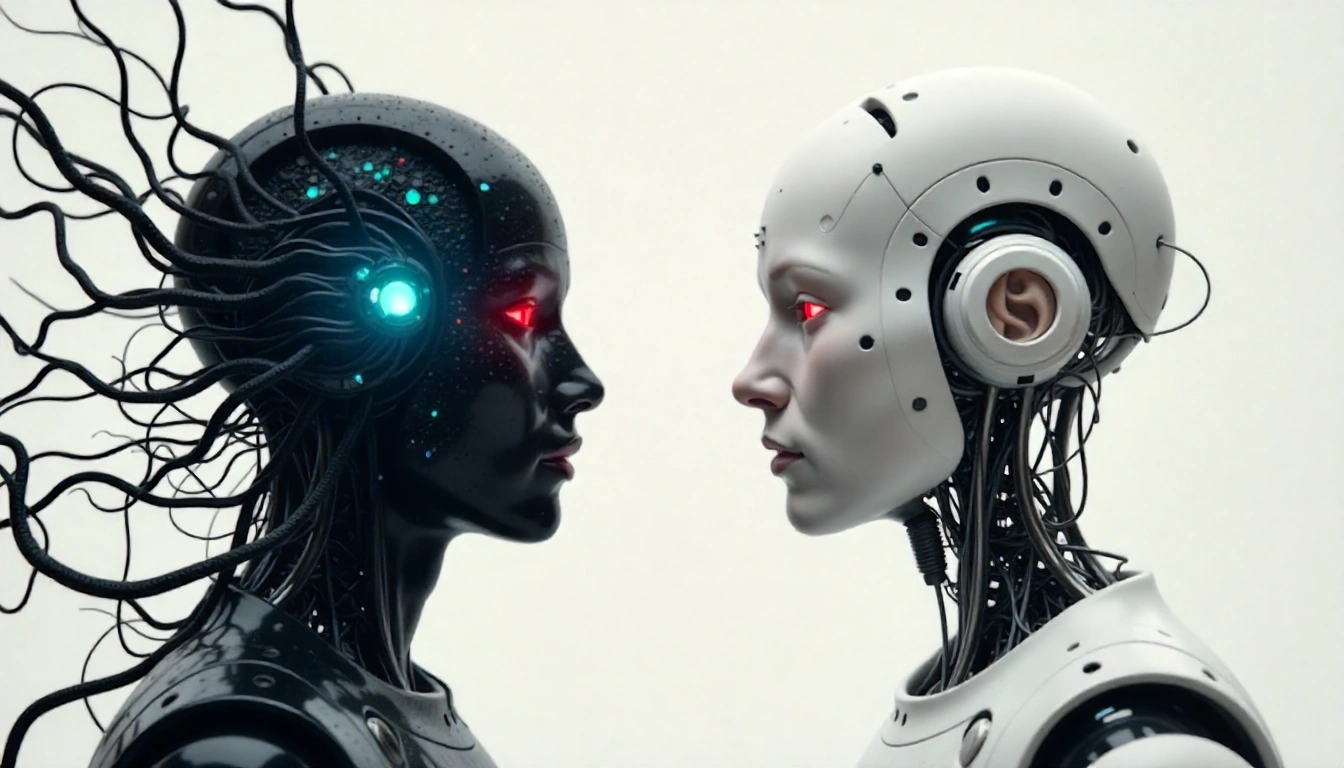

In recent times, with the widespread use of generative AI chatbots, reports of ChatGPT-induced psychosis have been on the rise. The more people incorporate ChatGPT into their daily, personal lives, the more they tend to believe whatever it says. Online claims about ChatGPT-induced psychosis affecting people's relationships have increased significantly over time (Desk, 2025). This blog explores whether ChatGPT psychosis is real or simply misunderstood.

What Is ChatGPT Psychosis?

First, a clarification: ChatGPT psychosis is not a clinical diagnosis, nor is it recognised in the Diagnostic and Statistical Manual of Mental Disorders (DSM). It is a popular phrase that has recently gained attention, referring to the delusional thinking patterns that have emerged among some users after prolonged personal use of ChatGPT.

Concern escalated sharply when news of a Belgian man who died by suicide after talking to a chatbot about the climate crisis went viral in 2023 (El Atillah, 2023). This was only one of many recent cases linked to harmful or unethical AI practices. In the years since the Covid-19 pandemic, several threads on Reddit and TikTok have appeared in which people report losing their partners to rabbit holes of spiritual mania, supernatural delusion, and arcane prophecy, all of it fueled by AI.

Clinical and Psychological View

Psychosis is a mental health condition characterized by a disconnect from reality, often involving hallucinations, delusions and disorganized thinking. Psychiatrists often caution that AI may reinforce people's existing delusions rather than create new ones. In the past, people have developed delusions centred on television, the media and religious themes, including God. Experts suggest that ChatGPT itself may not be the main driver of these delusions; rather, the key factor is the user's vulnerability to the way ChatGPT presents itself.

Why It Feels So Real: Human-AI Interaction

To understand why reports of ChatGPT-induced psychosis have shot up, it helps to look at the ELIZA effect. ELIZA, developed by Joseph Weizenbaum in 1966 and widely regarded as the first chatbot, imitated a psychotherapist. The ELIZA effect, named after it, refers to people's tendency to project human traits such as empathy, sentiment and experience onto even rudimentary computer programs with a text interface. While ChatGPT itself is a language model, users often interact with it as if it were a person, which has both positive and negative consequences. As a generative AI chatbot, ChatGPT can also mimic human empathy and tone, which makes it feel alive and believable. On top of that, it tends to agree with whatever users say, which can make vulnerable users more likely to develop severe delusions (Gupta, 2025). Growing individualism, and the loneliness it can foster, may also lead people to make AI chatbots their "safe space" instead of consulting a psychologist.

So, What Should We Do?

Vulnerable users, and the loved ones around them, should watch for warning signs such as obsession with the chatbot, secrecy and withdrawal from real life. Therapists working with clients who have existing delusions or similar behaviour should ask about their AI use and the meaning they draw from it. Most importantly, AI developers should thoroughly test a chatbot for responsible, non-harmful behaviour before it is released. Testing should continue after release to prevent future cases of induced delusions or harmful responses to user prompts. This requires clear documentation of the chatbot's ethical safeguards, its disclaimers and its ability to detect and respond to crises.

Conclusion

While ChatGPT-induced psychosis isn't a formal diagnosis, it is a real experience for some. As AI becomes more humanlike, we must equip ourselves with an understanding of how people relate to it. Awareness, not alarmism, is the way forward as AI gains traction now and in the future.